Quantum: The Regulatory Frontier That Will Catch Us Off Guard

Quantum computing is revolutionizing medical device development, but regulatory frameworks aren't ready. Companies like Algorithmiq are using quantum computers to generate physically accurate data for drug discovery, reporting a 100x precision improvement in simulations for a light-activated cancer therapy, yet the FDA's January 2025 draft guidance on AI-enabled device software doesn't address quantum validation challenges. How do you validate data you can't reproduce classically? Regulatory science needs quantum-aware frameworks now, before quantum-AI medical devices reach the clinic.

Yesterday, I met Sabrina Maniscalco, CEO of Algorithmiq, at the Italian Tech Forum in Zurich. They develop quantum algorithms for life sciences, including applications in cancer therapy. Despite having taken two courses in quantum physics at university and working extensively with AI/ML medical devices, I found myself needing to educate myself from scratch on what quantum computing means for our field.

What I discovered was both inspiring and unsettling: we're standing at the edge of a paradigm shift in medical technology, and regulatory science isn't even looking in that direction yet.

What Is Quantum Computing and Why Does It Matter?

Classical computers operate using bits, each either 0 or 1. Quantum computers use qubits, which can exist in a superposition of 0 and 1. Qubits can also be entangled, meaning their states are correlated in a way that cannot be described qubit by qubit, regardless of distance. For certain problems, quantum algorithms exploit these properties, combining many possibilities through interference, in ways classical computers cannot efficiently replicate.

For medtech, this isn't academic curiosity; it's transformative capability. Classical computers struggle to simulate molecules exactly beyond a modest size, because the number of quantum states to track grows exponentially with the number of electrons. Even a molecule with around 30 atoms has more quantum states than a classical computer can practically track.
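To put a number on that: storing the exact quantum state of n qubits on a classical computer takes 2^n complex amplitudes. The sketch below assumes a brute-force state-vector representation with 16 bytes per amplitude (double-precision complex numbers); real quantum chemistry codes use smarter approximations, so read it as an illustration of the scaling, not a statement about any specific molecule. In common encodings each spin-orbital of a molecule maps to one qubit, and a molecule of a few dozen atoms can easily have more than 50 spin-orbitals.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store every amplitude of an n-qubit state exactly.

    Assumes one double-precision complex number (16 bytes) per basis state.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


def human_readable(n_bytes: int) -> str:
    """Format a byte count using binary prefixes (KiB, MiB, GiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units[:-1]:
        if value < 1024:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} {units[-1]}"


for n in (10, 30, 50):
    print(f"{n} qubits -> {human_readable(state_vector_bytes(n))}")
# 10 qubits -> 16 KiB
# 30 qubits -> 16 GiB
# 50 qubits -> 16 PiB
```

Each additional qubit doubles the memory, which is why exact classical simulation hits a wall so quickly.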

In principle, quantum computers could help model:

Drug-molecule interactions at atomic precision

Protein folding and three-dimensional structures

Tumor microenvironments at cellular and molecular levels

Personalized treatment responses based on genetic profiles

The AI-Quantum Hybrid Approach

During our conversation, Sabrina explained: "AI is only as good as the data it's trained on. With quantum computing, we can generate vast amounts of physically accurate data and then train AI on it. Imagine the possibilities from simulating the behavior of ALL atoms in our body."

This is Algorithmiq's innovation: combining quantum computing with AI to address one of AI's fundamental limitations—the need for massive training data.

For many biological phenomena, we simply don't have enough experimental data. It's too expensive, too dangerous, or physically impossible to measure. Quantum computers can generate synthetic training datasets that are physically accurate—based on quantum mechanics—but impossible to obtain experimentally.
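The hybrid idea can be sketched in a few lines. In this toy version, a cheap classical formula stands in for the quantum simulation: the hypothetical `simulate_energy_gap` returns the exact energy gap of a two-state model and is used to label a grid of inputs, and a deliberately simple surrogate model (k-nearest neighbours) then learns from those labels. This illustrates the data flow under those assumptions; it is not Algorithmiq's method. In practice the labels would come from quantum hardware, with error bars, and the AI model would be far richer.

```python
import math
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (input value, simulated label) pairs


def simulate_energy_gap(coupling: float, detuning: float = 1.0) -> float:
    """Hypothetical stand-in for an expensive quantum simulation.

    Returns the exact gap between the two eigenvalues of the toy 2x2
    Hamiltonian [[detuning/2, coupling], [coupling, -detuning/2]].
    """
    return 2.0 * math.sqrt((detuning / 2.0) ** 2 + coupling ** 2)


def generate_dataset(n_points: int) -> Dataset:
    """Step 1: label an even grid of couplings in [0, 2] with the simulator."""
    if n_points < 2:
        raise ValueError("need at least two points")
    couplings = [2.0 * i / (n_points - 1) for i in range(n_points)]
    return [(c, simulate_energy_gap(c)) for c in couplings]


def knn_predict(train: Dataset, x: float, k: int = 2) -> float:
    """Step 2: a minimal surrogate model, averaging the k nearest labels."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return sum(label for _, label in nearest) / len(nearest)


train = generate_dataset(41)
query = 1.025  # a coupling the "simulator" was never run on
print(f"surrogate: {knn_predict(train, query):.4f}  exact: {simulate_energy_gap(query):.4f}")
```

Once trained, the surrogate answers new queries without rerunning the expensive simulation, which is the appeal of generating training data this way.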

Sabrina mentioned that quantum computing capabilities are now available on the cloud, with enterprise access in the million-dollar range annually. For specific computational problems, quantum computers can be more efficient than traditional supercomputers.

Algorithmiq has already announced partnerships with Microsoft (December 2024) and Quantum Circuits (February 2025) to accelerate drug discovery.

A Concrete Example: Photodynamic Cancer Therapy

One particularly compelling application demonstrates quantum computing's real-world impact: photodynamic therapy (PDT) for cancer.

PDT uses special molecules called photosensitizers that are activated by light to produce therapeutic effects. The benefits are significant:

No long-term side effects

Less invasive than surgery

Outpatient procedure

Precisely targeted

Can be repeated at the same site (unlike radiation)

5-10 times less costly than other cancer treatments

The challenge lies in designing these photosensitizer molecules. It requires understanding tiny energy gaps between electronic states—differences that dictate how molecules behave when exposed to light. Classical quantum chemistry algorithms struggle to calculate these energy gaps with the necessary accuracy.
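One way to see why precision matters: a photon's energy E and wavelength λ are linked by E = hc/λ, so any error in a computed excitation energy moves the predicted activation wavelength. The sketch below uses an illustrative excitation energy of 1.8 eV (red light; not a value for any specific photosensitizer) and shows how errors of 0.1, 0.01 or 0.001 eV would shift the prediction.

```python
HC_EV_NM = 1239.84198  # Planck constant times speed of light, in eV*nm


def gap_to_wavelength_nm(gap_ev: float) -> float:
    """Wavelength of light whose photon energy equals the excitation energy."""
    if gap_ev <= 0:
        raise ValueError("excitation energy must be positive")
    return HC_EV_NM / gap_ev


def wavelength_error_nm(gap_ev: float, error_ev: float) -> float:
    """How far the predicted wavelength moves if the gap is underestimated by error_ev."""
    return gap_to_wavelength_nm(gap_ev - error_ev) - gap_to_wavelength_nm(gap_ev)


print(round(gap_to_wavelength_nm(1.8), 1))  # 688.8
for err in (0.1, 0.01, 0.001):
    print(f"error {err} eV -> shift {wavelength_error_nm(1.8, err):.2f} nm")
```

An error of a tenth of an electronvolt moves the prediction by tens of nanometres, enough to change which light source a therapy would need.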

Using IQM's Emerald quantum processing unit and Algorithmiq's advanced error mitigation techniques, the team achieved a 100x improvement in precision compared to results from other quantum hardware providers. This work, part of the Wellcome Leap Q4Bio Challenge, is establishing an end-to-end quantum-centric drug discovery pipeline for light-activated anti-cancer drugs.
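The post doesn't detail Algorithmiq's error-mitigation techniques, so for intuition only, here is zero-noise extrapolation (ZNE), a widely used generic technique: run the same circuit at deliberately amplified noise levels, then extrapolate the measured value back to zero noise. The "measurements" below are synthetic, generated from an assumed quadratic noise model, and `richardson_extrapolate` is a hypothetical helper written for this sketch.

```python
from typing import Sequence


def richardson_extrapolate(scales: Sequence[float], values: Sequence[float]) -> float:
    """Evaluate the polynomial through the (scale, value) points at scale = 0."""
    if len(scales) != len(values) or len(scales) < 2:
        raise ValueError("need at least two matching (scale, value) pairs")
    if len(set(scales)) != len(scales):
        raise ValueError("noise scale factors must be distinct")
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        # Lagrange basis polynomial for point i, evaluated at zero noise
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


def noisy_measurement(scale: float) -> float:
    """Synthetic data: ideal value -1.0 distorted by an assumed noise model."""
    return -1.0 + 0.12 * scale - 0.01 * scale ** 2


scales = [1.0, 2.0, 3.0]  # 1.0 = native hardware noise; larger = deliberately amplified
values = [noisy_measurement(s) for s in scales]
mitigated = richardson_extrapolate(scales, values)
print(f"raw: {values[0]:.3f}  mitigated: {mitigated:.3f}")  # raw: -0.890  mitigated: -1.000
```

On real hardware each measured value also carries shot noise, and extrapolation amplifies that statistical error, which is one reason error mitigation remains an active research area.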

They're focusing on the BODIPY class of compounds—next-generation photosensitizers. With quantum computing, simulating their energy landscape becomes possible with unprecedented accuracy, paving the way for better-targeted therapies developed faster and more cost-effectively.

This is happening now.

Closing Health Data Gaps

We also discussed possibilities that particularly resonate with my work in femtech: using quantum computing to simulate complex biological systems like women's physiology, to close gaps in health data that is difficult or impossible to obtain experimentally.

Women's health research has historically been underfunded. Menstrual cycles, pregnancy, menopause—these introduce biological complexity that makes clinical trials more expensive and results harder to interpret. What if quantum simulation could help bridge these gaps by modeling hormonal interactions and reproductive system responses with atomic-level precision?

The Regulatory Challenges Ahead

Here's the uncomfortable truth: none of medtech's regulations or guidances currently contemplate quantum-AI hybrid diagnostics or therapeutics.

Challenge 1: The Validation Paradox

How do you validate quantum-generated data when you can't reproduce it classically?

The FDA's recent draft guidance on AI/ML-enabled device software (January 2025) asks manufacturers to disclose synthetic data provenance, describe the algorithms used to generate it, and demonstrate that it preserves clinical correlations. These are sensible requirements for classically generated synthetic data.
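For classically generated synthetic data, "preserves clinical correlations" can at least be tested against a real reference dataset. A minimal sketch, assuming both datasets are tables of numeric columns: compare every pairwise Pearson correlation and report the largest gap. The metric, the 0.1 tolerance and the toy values are assumptions for illustration; the FDA draft does not prescribe them. Note that the check needs real data to compare against.

```python
import math
from itertools import combinations
from typing import Dict, List

Table = Dict[str, List[float]]  # column name -> values (same length per column)

MAX_ALLOWED_GAP = 0.1  # hypothetical acceptance threshold, not from FDA guidance


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equally long columns."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation undefined for a constant column")
    return cov / (spread_x * spread_y)


def max_correlation_gap(real: Table, synthetic: Table) -> float:
    """Largest absolute difference in pairwise correlations, real vs synthetic."""
    columns = sorted(real)
    if len(columns) < 2:
        raise ValueError("need at least two columns")
    return max(
        abs(pearson(real[a], real[b]) - pearson(synthetic[a], synthetic[b]))
        for a, b in combinations(columns, 2)
    )


# Made-up toy values, for illustration only
real = {"age": [34, 45, 52, 61, 70], "biomarker": [1.1, 1.9, 2.4, 3.2, 3.9]}
synthetic = {"age": [30, 41, 55, 63, 68], "biomarker": [0.9, 2.0, 2.6, 3.0, 4.1]}
gap = max_correlation_gap(real, synthetic)
print(f"max gap {gap:.3f}, within tolerance: {gap <= MAX_ALLOWED_GAP}")
```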

But they break down when the "algorithm" is a quantum computer simulating physics that classical systems fundamentally cannot reproduce. How do you verify quantum-generated molecular data "preserves clinical correlations" when there's no classical ground truth? The entire point of quantum computing is simulating phenomena classical computers cannot.

Challenge 2: Black Box Squared

AI is already a "black box"—how do we maintain our ability to explain and reproduce the operating principles when we layer quantum computing on top?

Explainability is already a regulatory challenge. The EU MDR Article 61 and FDA guidance emphasize transparency in clinical decision-making. But AI models, particularly deep learning, are notoriously opaque.

Add quantum computing—inherently probabilistic, extraordinarily sensitive to environmental interference—and we're layering one form of opacity on another. Yet regulatory frameworks require that medical devices be explainable, reproducible, and transparent.
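"Inherently probabilistic" has a concrete meaning: a quantum computer returns individual measurement outcomes ("shots"), and every quantity is estimated from a finite number of them. A minimal sketch, assuming outcomes of +1 or -1 and ignoring hardware noise: the standard error shrinks only with the square root of the number of shots, so halving the error bar costs four times as many runs.

```python
import math
from typing import Tuple


def estimate_expectation(plus_counts: int, minus_counts: int) -> Tuple[float, float]:
    """Estimate an expectation value and its standard error from +1/-1 shot counts."""
    shots = plus_counts + minus_counts
    if shots <= 0:
        raise ValueError("need at least one shot")
    mean = (plus_counts - minus_counts) / shots
    # A +/-1 outcome with mean m has variance 1 - m**2; the error of the
    # average over N independent shots is sqrt(variance / N).
    std_error = math.sqrt((1.0 - mean ** 2) / shots)
    return mean, std_error


for plus, minus in ((600, 400), (2400, 1600)):
    mean, err = estimate_expectation(plus, minus)
    print(f"{plus + minus} shots: {mean:.3f} +/- {err:.3f}")
# 1000 shots: 0.200 +/- 0.031
# 4000 shots: 0.200 +/- 0.015
```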

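The FDA's three-pillar framework for Software as a Medical Device (adopted from the IMDRF clinical evaluation guidance) asks:

Valid clinical association: Is there a valid clinical association between the device output and the clinical condition?

Analytical validation: Does the software correctly process input data to generate accurate, reliable, and precise output?

Clinical validation: Does use of the output achieve the intended purpose in the target population?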

For quantum-AI systems, how do you analytically validate "correctness" when there's no classical benchmark?

Challenge 3: Cybersecurity and Q-Day

Sufficiently powerful quantum computers are expected to eventually break today's widely used public-key encryption, such as RSA and elliptic-curve cryptography, a point often called "Q-Day." This poses serious risks:

Adversaries can collect encrypted medical data today and decrypt it later

Medical devices that rely on current cryptographic protocols, often for years in the field, could be compromised

NIST published its first three post-quantum cryptography standards (FIPS 203, 204 and 205) in August 2024 and selected a fifth algorithm, HQC, in March 2025, but adoption in medical devices has been slow. Medical device manufacturers should start implementing quantum-resistant encryption now.
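A common way to reason about the "collect now, decrypt later" risk is Mosca's inequality: if x + y > z, where x is how long the data must stay confidential, y is how long migrating to quantum-resistant cryptography will take, and z is the time until a quantum computer can break current encryption, then data protected today is already at risk. All numbers below are illustrative assumptions; nobody knows z.

```python
from dataclasses import dataclass


@dataclass
class QuantumRiskInputs:
    shelf_life_years: float   # x: how long the data must stay confidential
    migration_years: float    # y: time to move devices and back-ends to post-quantum crypto
    years_to_q_day: float     # z: assumed time until current encryption can be broken


def exposure_years(risk: QuantumRiskInputs) -> float:
    """How far the required confidentiality window extends past the assumed Q-Day."""
    return risk.shelf_life_years + risk.migration_years - risk.years_to_q_day


def at_risk(risk: QuantumRiskInputs) -> bool:
    """Mosca's inequality: data is exposed if x + y > z."""
    return exposure_years(risk) > 0


# Illustrative assumptions only: long-lived health records, a slow device fleet.
records = QuantumRiskInputs(shelf_life_years=25, migration_years=5, years_to_q_day=10)
print(at_risk(records), exposure_years(records))  # True 20
```

The migration time y is the term manufacturers control, which is the argument for starting now.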

What Regulatory Frameworks Currently Exist?

The closest we have are two recent FDA guidances:

FDA Guidance on Real-World Evidence (December 2025) emphasizes that data must be relevant and reliable, with a fit-for-purpose approach. This potentially opens a pathway: quantum-generated synthetic data could be acceptable if manufacturers demonstrate it's the most appropriate method for answering specific clinical questions.

FDA Guidance on AI/ML-Enabled Device Software (Draft, January 2025) addresses data management, synthetic data requirements, performance validation—but all assuming classical computational paradigms.

Neither contemplates quantum-generated training data, validation when classical reproduction is impossible, or uncertainty quantification for quantum probabilistic outputs.

The EU AI Act, MDR/IVDR, and ISO standards similarly don't address quantum computing.

Why This Matters Now

Waiting until quantum devices reach the clinic or reach their "ChatGPT moment" means we'll be reactive instead of proactive, again.

We've seen this pattern with AI. By the time ChatGPT brought AI to mainstream awareness, the technology had been developing for decades. Regulators scrambled to catch up.

But AI builds on classical computing principles we already understood. Quantum computing is fundamentally different. The learning curve is steeper, the validation challenges more complex.

If we wait until a quantum-enhanced diagnostic applies for FDA clearance before starting these conversations, we'll be years behind.

So, what needs to happen?

Regulatory agencies may:

Introduce quantum-aware terminology in guidance documents

Establish working groups bringing together quantum scientists, device developers, and regulatory professionals

Develop validation frameworks specifically for quantum-generated synthetic data

Issue guidance on quantum-resistant cybersecurity for medical devices

Industry may:

Engage early with regulators through pre-submission meetings

Document quantum approaches in detail

Build quantum literacy within regulatory and quality teams

Implement post-quantum cryptography now

Thank you to Sabrina Maniscalco for the thought-provoking conversation, and to Camera di Commercio Italiana a Zurigo for creating the space where these insights happen.

References

FDA guidance: "Software as a Medical Device (SAMD): Clinical Evaluation"

FDA guidance: "Use of Real-World Evidence to Support Regulatory Decision-Making for Medical Devices"

Methodology Note: This article is based on my original LinkedIn post, reflecting my professional experiences and personal perspectives. Claude AI assisted in elaborating the post into a broader article by integrating personal notes, literature research, fact-checking and deeper insights on the topic. All analysis and regulatory perspectives are my own, and all content has been reviewed by me for accuracy.

PCCP beyond AI

Very exciting trend of femtech apps integrating with wearable data! How does this work for the regulated ones? I wanted to share this clever use of PCCP by Natural Cycles° from last year, which impressed me.

What's PCCP?

A Predetermined Change Control Plan (PCCP) is a regulatory instrument devised by the FDA, and one that, as a European, I'm most jealous of. It was designed for AI devices, which by design need to be able to evolve their accuracy in the field, getting smarter the more data they acquire. Traditionally, any change to the accuracy and performance of a device required a regulatory resubmission (still the case in the EU) and a review wait of up to 90 days.

With a PCCP you can get pre-authorization for planned modifications within a range of performance that you anticipate and accept, so each change does not need a new submission.

What I found clever is that Natural Cycles°, the pioneer of regulated fertility awareness, used the PCCP not for AI changes but for the variability of source data from different wearables.

While, as far as I'm aware, they currently integrate only with ŌURA and Apple Watch, this clears the way for them to swiftly add any more integrations to their conception/contraception suite as long as they fit their predefined specs (see table in pdf).
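The mechanism can be pictured as a gate: a new wearable integration is added under the PCCP only if its data meets the predefined specifications; otherwise it needs a new submission. A minimal sketch in which the metric names, thresholds and device names are hypothetical (they are not the acceptance criteria in Natural Cycles' 510(k) summary):

```python
from dataclasses import dataclass
from typing import List

# Hypothetical acceptance criteria for illustration; NOT the specs in the 510(k) summary.
MAX_MEAN_ABS_TEMP_ERROR_C = 0.2   # agreement with a reference thermometer
MIN_FRACTION_NIGHTS_VALID = 0.8   # share of nights with usable temperature data


@dataclass
class WearableValidation:
    device_name: str
    mean_abs_temp_error_c: float
    fraction_nights_valid: float


def spec_failures(result: WearableValidation) -> List[str]:
    """List every predefined spec the candidate wearable fails (empty if none)."""
    failures = []
    if result.mean_abs_temp_error_c > MAX_MEAN_ABS_TEMP_ERROR_C:
        failures.append(f"temperature error {result.mean_abs_temp_error_c} C too high")
    if result.fraction_nights_valid < MIN_FRACTION_NIGHTS_VALID:
        failures.append(f"only {result.fraction_nights_valid:.0%} of nights usable")
    return failures


def route(result: WearableValidation) -> str:
    """Within specs: add under the PCCP. Otherwise: a new submission is needed."""
    return "add under PCCP" if not spec_failures(result) else "new regulatory submission"


print(route(WearableValidation("hypothetical-ring", 0.12, 0.93)))   # add under PCCP
print(route(WearableValidation("hypothetical-watch", 0.35, 0.70)))  # new regulatory submission
```

The design point is that the criteria are fixed before the new device's data arrives, which is what lets a regulator authorize the change in advance.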

This is an example of how:

1️⃣ Regulatory instruments that are smart and abreast of the times enable even more innovation than they were primarily intended to,

2️⃣ Femtech is riding the wave of biomarkers, ensuring most users can be served irrespective of which devices they choose - it's not just the iOS vs Android divide anymore!

3️⃣ Scientific research and clinical partnerships will see an incredible boost of opportunity from all this data, finally compensating for the lack of data that we know women's health has suffered until now!

What else could we use PCCP for? And how long until we have a similar toolkit in Europe under MDR? 🫠

NC's current integrations here

Link to full 510(k) summary here

LLM for Quality tasks

A short story on using AI for a QARA task and coming up with a framework for doing it faster (4h down to 1h) while keeping it under control.

Task at hand:

The client received its inspection report from the authority by post, in the national language, and needed it digitised and in English in order to act on it.

1️⃣ Convert scanned pdf to electronic document

ChatGPT 👎 didn’t identify text in the scanned pdf.

Gemini and NotebookLM did it, but I was unconvinced by the accuracy 🧐 .

Google Drive did the job: I uploaded the pdf and chose "Open with > Google Docs". ✅

2️⃣ Translate electronic document

ChatGPT and Gemini kept hallucinating badly 😵‍💫 .

The "Translate document" function of Google Docs returned a poor literal translation 🥴 .

NotebookLM was accurate but skipped content 😥 .

I ended up translating section by section via Gemini's in-text "AI Refine" function, using a very meticulous prompt and checking the result manually in a side-by-side table 🥵 .

3️⃣ Format electronic document similar to the original

ChatGPT and NotebookLM didn’t work 🤕 .

Gemini could make some basic improvements via the in-text "AI Refine" function, but neither via the built-in "Ask Gemini" in Google Docs nor via the browser chat. Interesting how much these differ in capability.

In the end, the formatting fix was mostly manual 🤯 .

Conclusion:

After 4 miserable hours spent on the task, with many failed attempts and far too much manual input, I had a satisfactory document.

But, I still wanted to get to the bottom of this. There must be a better way??

So I restarted from scratch with a different approach, which I can summarise using the PDCA (Plan-Do-Check-Act) / Agile cycle we use in Quality:

⤵️ Plan: Ask AI for the right tools and prompts to achieve your goal. And importantly, "ask AI to ask you" questions or point out what is unclear in order to help you refine your requirements accurately.

▶️ Do: Approach it step by step. Run your refined prompt for your SUBtask in your selected tool. Quick review of the output, refine the prompt. Change tool if needed.

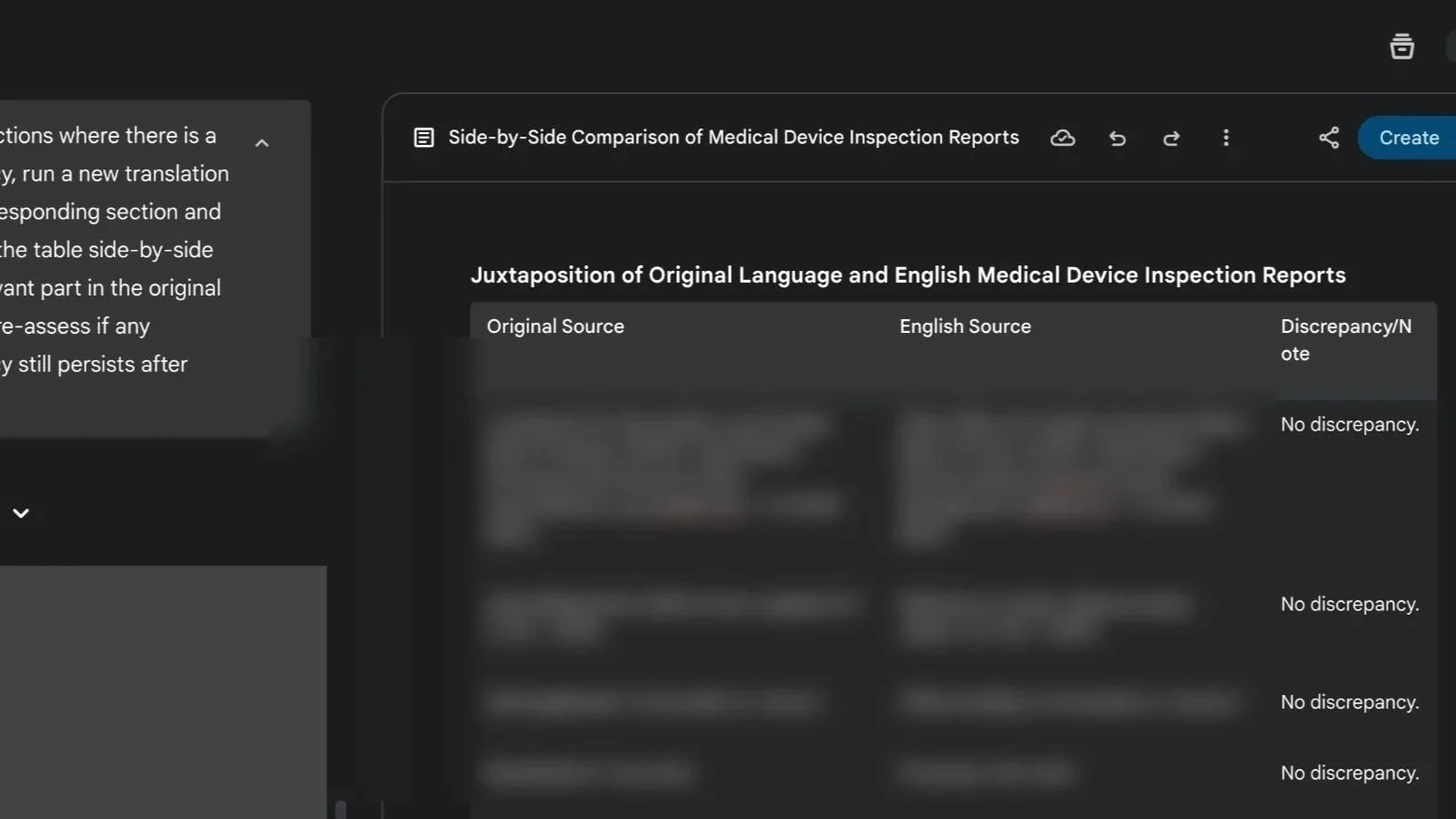

⏯️ Check: Get AI to verify its results and to help you check them manually by highlighting any discrepancies. For example, “juxtapose the original and translated content in a table section by section and note any discrepancies between the two versions of the text”.

🔁 Act: Tell AI to correct the discrepancies, then re-run the verification step to update results.
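Part of the Check step can also be automated without AI. A minimal sketch, assuming the original and the translation have already been split into matching sections: numbers (dates, deadlines, article or clause references) should survive translation unchanged, so sections whose numbers differ, or that are missing or empty, get flagged for human review. The function names, the regular expression and the German example text are illustrative assumptions.

```python
import re
from typing import List, Tuple

# Digits with optional separators, e.g. "30", "12.03.2025", "13485", "4.2"
NUMBER_PATTERN = re.compile(r"\d+(?:[.,/]\d+)*")


def numbers_in(text: str) -> List[str]:
    """All numeric tokens in a text, sorted so that word order does not matter."""
    return sorted(NUMBER_PATTERN.findall(text))


def find_discrepancies(original: List[str], translated: List[str]) -> List[Tuple[int, str]]:
    """Flag sections that are missing, empty, or whose numbers changed (0 = whole document)."""
    issues: List[Tuple[int, str]] = []
    if len(original) != len(translated):
        issues.append((0, f"section count differs: {len(original)} vs {len(translated)}"))
    for i, (src, dst) in enumerate(zip(original, translated), start=1):
        if not dst.strip():
            issues.append((i, "translated section is empty"))
        elif numbers_in(src) != numbers_in(dst):
            issues.append((i, f"numbers differ: {numbers_in(src)} vs {numbers_in(dst)}"))
    return issues


original = ["Frist: 30 Tage ab dem 12.03.2025.", "Abweichung 3 gemäss ISO 13485."]
translated = ["Deadline: 30 days from 12.03.2025.", "Nonconformity 4 according to ISO 13485."]
for section, problem in find_discrepancies(original, translated):
    print(section, problem)
# 2 numbers differ: ['13485', '3'] vs ['13485', '4']
```

Decimal separators can legitimately change in translation (1,5 vs 1.5), so expect some false positives; the point is to direct the manual check, not replace it.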

Eventually, by doing it this way, I achieved the same result in 1h and with more confidence in its accuracy. Still not extremely fast, but considerably faster!

I am curious, how would others have approached this dull task?

EU AI Act deployment

The EU AI Act has been in force since 1 August 2024. But is it, really?

In practice: not much today, but the clock has started. If your device includes an AI component or uses AI to support decisions, it’s time to take a closer look.

For high-risk systems, including many AI-based medical devices, there’s a 36-month transition from entry into force (i.e. until August 2027) to comply, as part of a phased implementation. However, some provisions apply earlier (e.g. banned uses of AI, codes of conduct).
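To see which parts of the phased timeline apply on a given date, a small lookup is enough. The dates below are the application dates in Article 113 of the AI Act (counting from entry into force in August 2024), each condensed to one line; check the legal text for the exact scope of each milestone, and note that later amendments could shift them.

```python
from datetime import date
from typing import List, Optional, Tuple

# Simplified application dates from Article 113 of the AI Act.
AI_ACT_MILESTONES: List[Tuple[date, str]] = [
    (date(2025, 2, 2), "General provisions incl. AI literacy; prohibited AI practices"),
    (date(2025, 8, 2), "General-purpose AI model obligations, governance, penalties"),
    (date(2026, 8, 2), "Most remaining provisions, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk AI in products under Annex I legislation, e.g. MDR/IVDR"),
]


def applicable_on(day: date) -> List[str]:
    """Milestones whose application date has been reached on the given day."""
    return [label for start, label in AI_ACT_MILESTONES if day >= start]


def next_milestone(day: date) -> Optional[Tuple[date, str]]:
    """The next milestone after the given day, or None if all already apply."""
    upcoming = [(start, label) for start, label in AI_ACT_MILESTONES if start > day]
    return upcoming[0] if upcoming else None


today = date(2025, 8, 2)
print(len(applicable_on(today)), next_milestone(today))
```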

Here’s what I see across medtech:

1. Confusion around scope and classification, e.g. is AI a tool covered by computerised system validation (CSV), or part of the device's intended use?

2. Assumptions that MDR compliance equals AI Act compliance, leading to reactive QMS updates in response to notified body (NB) feedback rather than proactive ones

3. Teams don't know how to resource it.

The good news is that I also see a booming AI-related offering from QARA consultants and training providers, which can help if you’re stuck on any of the above points. Cool examples (among many others):

• AI-first QARA frameworks and training e.g. Johner Institut GmbH https://lnkd.in/dBSuFfie,

• AI agents for compliance-checking and even FDA review outcome prediction such as Lexim AI or Acorn Compliance,

• GenAI embedded in eQMS tools such as Formwork from OpenRegulatory or Matrix One

What would help your team implement the AI Act? Curious to hear your challenges and to help you with the right support.